How to Deploy a React App to an S3 Bucket

One simple approach I often rely on to deploy a static frontend application is an object storage service, which on AWS means AWS S3.

In this article, I provide a step-by-step guide to automating the deployment of your static files to AWS S3 with GitLab CI/CD pipelines, using git tags as deploy triggers.

We start by creating an S3 bucket, then prepare GitLab with AWS credentials, write the pipeline, and finally test the setup end to end by pushing a git tag that triggers the pipeline and deploys our static files to the S3 bucket.

The approach in this article is deliberately generic: it demonstrates a concept you can apply regardless of your project or language.

Requirements

Set up AWS S3 bucket and access

Step 1:

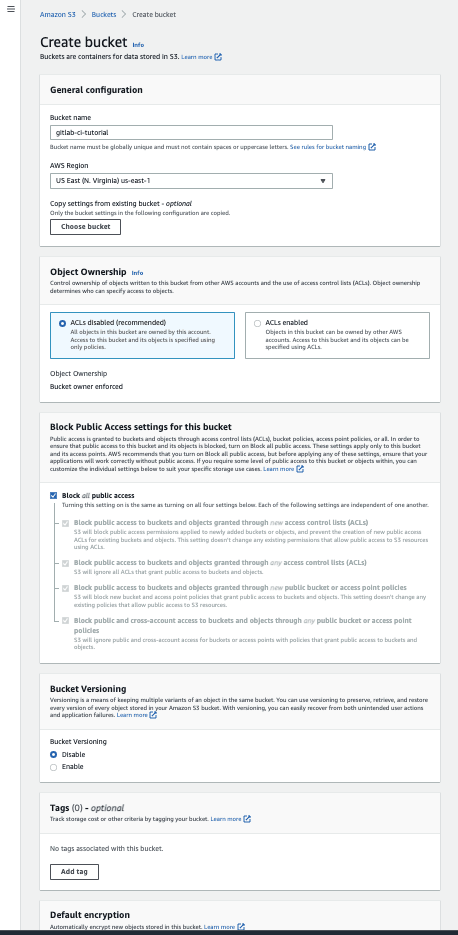

Go to the AWS S3 console and click the Create bucket button to create a bucket for storing the static files.

Step 2:

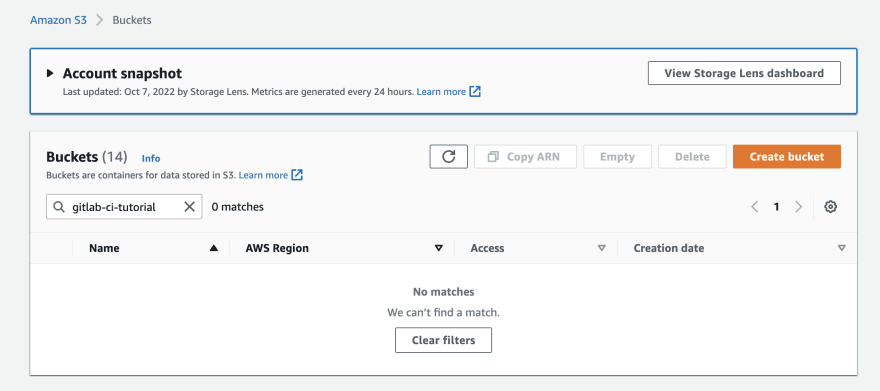

In the form provided, enter your bucket name.

For this guide, we will use the bucket name gitlab-ci-tutorial. S3 bucket names are globally unique, so you may need to pick a different name.

Next, select a region of your choice (this guide uses us-east-1), leave everything else as is, and click Create bucket.

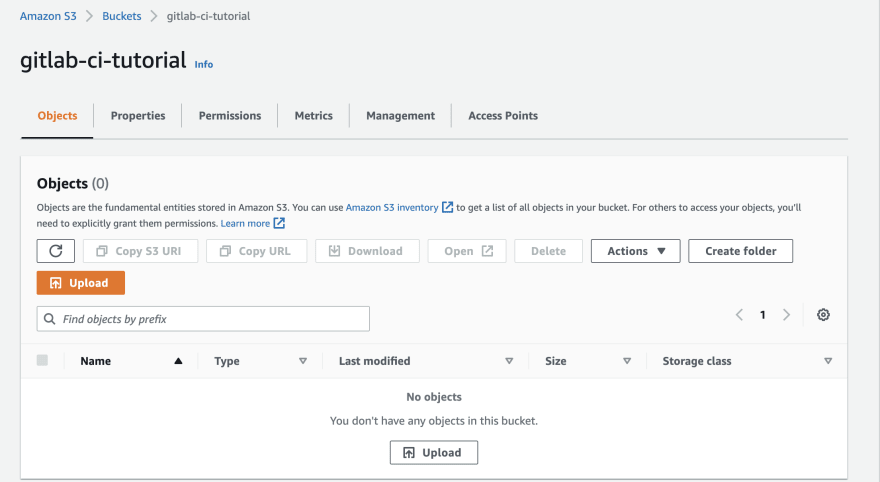

Notice that the created bucket is empty.

Step 3:

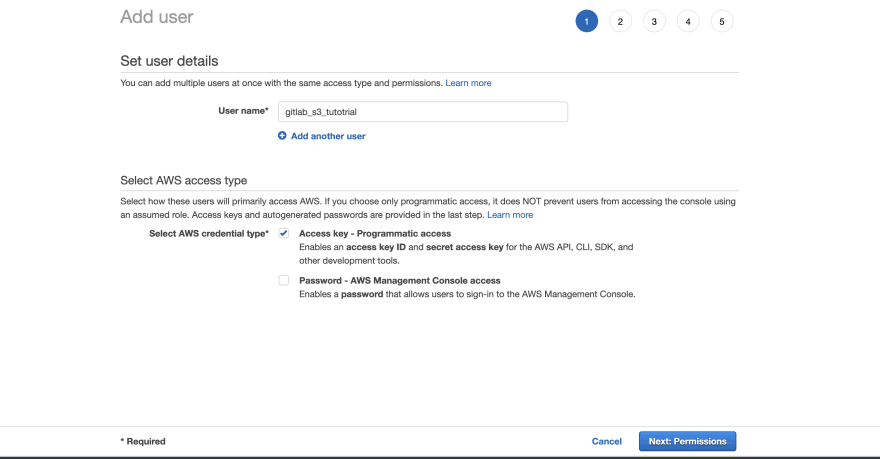

Go to AWS IAM to create a user with programmatic access to S3.

Click Add users.

Step 4:

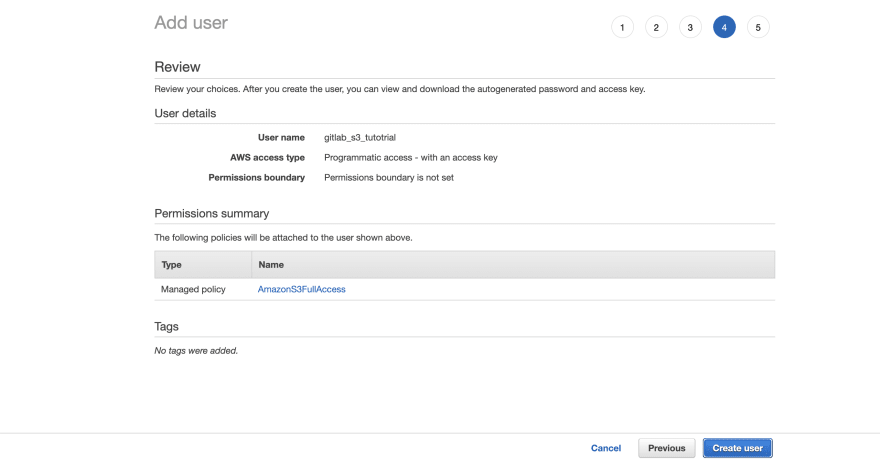

Following the steps in the form, create a user that GitLab will use to deploy files to the S3 bucket.

- Provide a name for the user (gitlab_s3_tutorial in this guide) and check the Access key - Programmatic access checkbox.

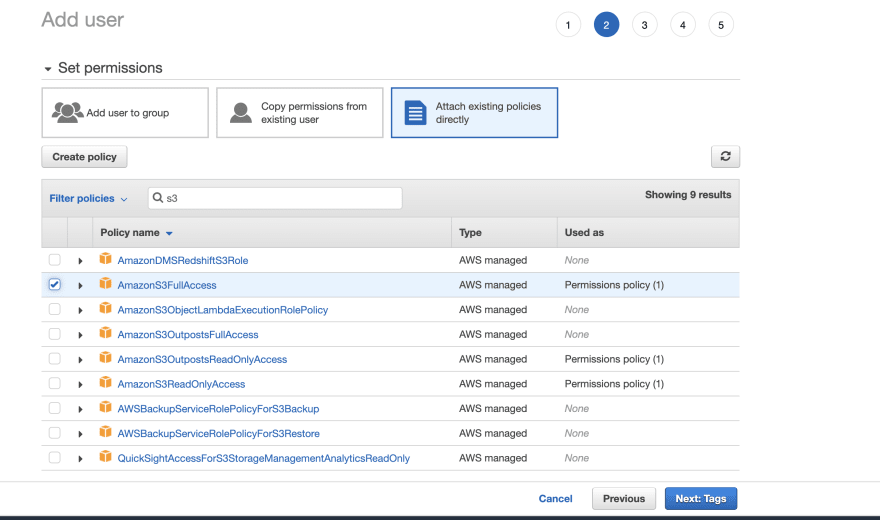

- For permissions, this guide attaches the existing AmazonS3FullAccess policy, which grants full S3 access. In practice, create a more fine-grained policy based on your project's requirements.

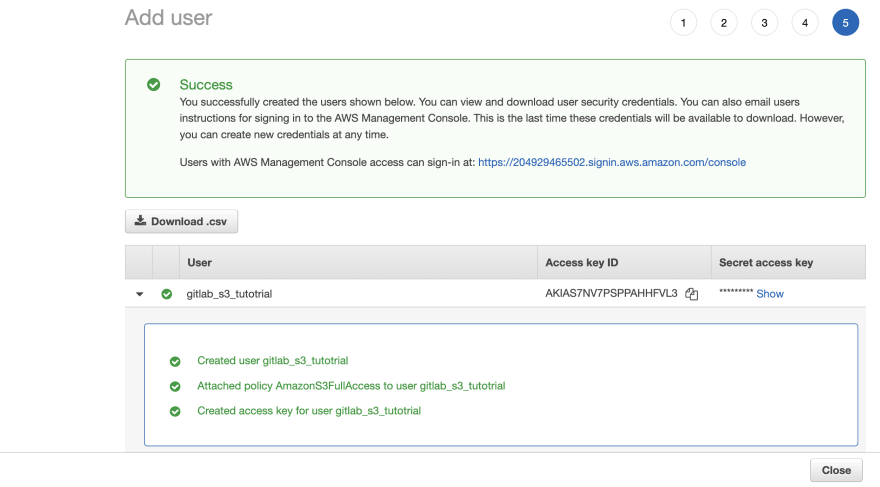

Step 5:

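Review and create the user. Then copy the Access key ID and Secret access key from the confirmation page; AWS shows the secret access key only once, so store it safely for the next section.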

Prepare GitLab

Step 1:

Create your project on GitLab. For this guide, I created a project named gitlab-ci-tutorial1.

Step 2:

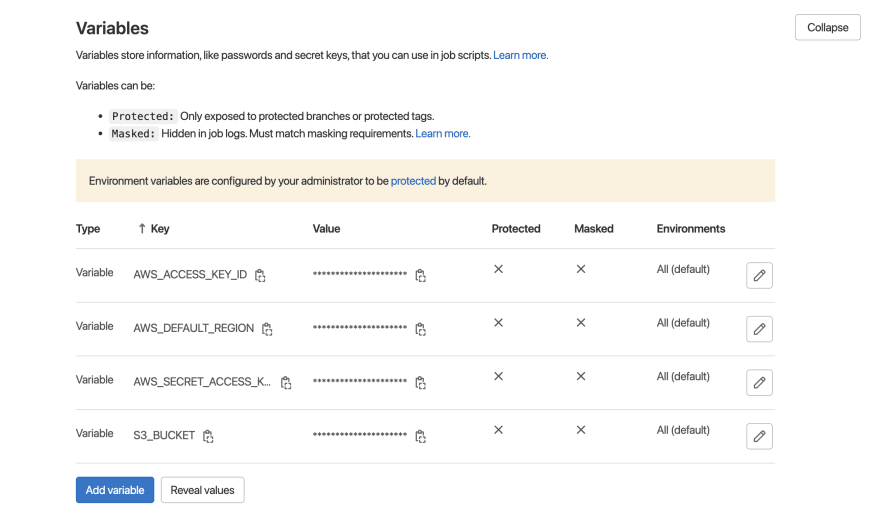

Within the created project, navigate to Settings > CI/CD and expand the Variables section.

Add the following variables, which the pipeline uses to deploy to AWS S3. The access key values come from the IAM user created in Set up AWS S3 bucket and access > Step 5 above:

- AWS_ACCESS_KEY_ID: <Your Access key ID>
- AWS_SECRET_ACCESS_KEY: <Your secret access key>
- AWS_DEFAULT_REGION: us-east-1 (as chosen in the steps above)
- S3_BUCKET: gitlab-ci-tutorial (the name of your bucket)
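As an alternative, the two non-secret values could live in the pipeline file itself so they are versioned with the code, while the access keys stay in the CI/CD settings. A minimal sketch, assuming the region and bucket name used in this guide:

```yaml
# Non-secret configuration committed alongside the pipeline (values assumed from this guide).
# AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY should stay in Settings > CI/CD > Variables.
variables:
  AWS_DEFAULT_REGION: us-east-1
  S3_BUCKET: gitlab-ci-tutorial
```

If the same variable is also defined under Settings > CI/CD, GitLab uses the settings value, so it is simpler to define each variable in only one place.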

Write the pipeline

Now we are ready to create the pipeline, which is defined in a .gitlab-ci.yml file at the root of the project.

Whenever you push to GitLab, it detects this file and runs the pipeline. In this case, however, we want the pipeline to deploy only when we push a new tag.

Create a .gitlab-ci.yml file with the following contents:

```yaml
stages:
  - build
  - deploy

build:
  stage: build
  script:
    # We simulate a build phase by manually creating files in a build folder
    # An example would be using `yarn build` to build a React project that would create static files in a build or public folder
    - mkdir -p build && touch build/build.html build/build.txt
  artifacts:
    when: on_success
    paths:
      - build/
    expire_in: 20 mins

deploy:
  stage: deploy
  needs:
    - build
  image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
  rules:
    - if: $CI_COMMIT_TAG # Run this job when a tag is created
  script:
    - echo "Running deploy"
    - aws s3 cp ./build s3://$S3_BUCKET/ --recursive
    - echo "Deployment successful"
```

- The pipeline above uses stages and passes artifacts (outputs) from one stage to the next.
- The build stage mimics an actual project build, which would create output files in a build folder. Here, we create a build folder containing two files using shell commands.
- The aws-base image used in the deploy stage contains the AWS CLI needed to run the deploy script. By default, the AWS CLI reads credentials and region from environment variables with the exact names we added to GitLab's Variables section earlier.
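For an actual React project, the simulated build job could be replaced with something like the sketch below. It assumes a Create React App style project that uses Yarn (classic) and writes its output to build/; the node:20 image tag is also an assumption, so match it to the Node.js version your project uses.

```yaml
build:
  stage: build
  image: node:20 # assumed Node.js version; use the one your project targets
  script:
    # Install the exact dependency versions pinned in yarn.lock (Yarn classic flag)
    - yarn install --frozen-lockfile
    # Assumes the build script writes static files to build/ (Create React App default)
    - yarn build
  artifacts:
    when: on_success
    paths:
      - build/
    expire_in: 20 mins
```

If your tooling writes to a different folder (Vite, for example, defaults to dist/), update both the artifacts path and the path in the deploy command.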

For a full description of the configuration options of the .gitlab-ci.yml file, see the GitLab CI/CD YAML syntax reference.
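One limitation of aws s3 cp --recursive is that files removed from a newer build remain in the bucket from earlier deployments. If that matters for your project, the deploy script could use aws s3 sync with --delete instead, which also removes bucket objects that no longer exist in the local build folder. A sketch, keeping the rest of the deploy job unchanged:

```yaml
deploy:
  stage: deploy
  needs:
    - build
  image: registry.gitlab.com/gitlab-org/cloud-deploy/aws-base:latest
  rules:
    - if: $CI_COMMIT_TAG # Run this job when a tag is created
  script:
    - echo "Running deploy"
    # Upload new or changed files and delete bucket objects that are not in ./build
    - aws s3 sync ./build "s3://$S3_BUCKET/" --delete
    # List the bucket contents so the job log shows what was deployed
    - aws s3 ls "s3://$S3_BUCKET/" --recursive
    - echo "Deployment successful"
```

Because --delete removes anything in the bucket that is not part of the build, this variant only suits a bucket dedicated to this application.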

Now, commit the file to the repo and push to the project we created earlier.

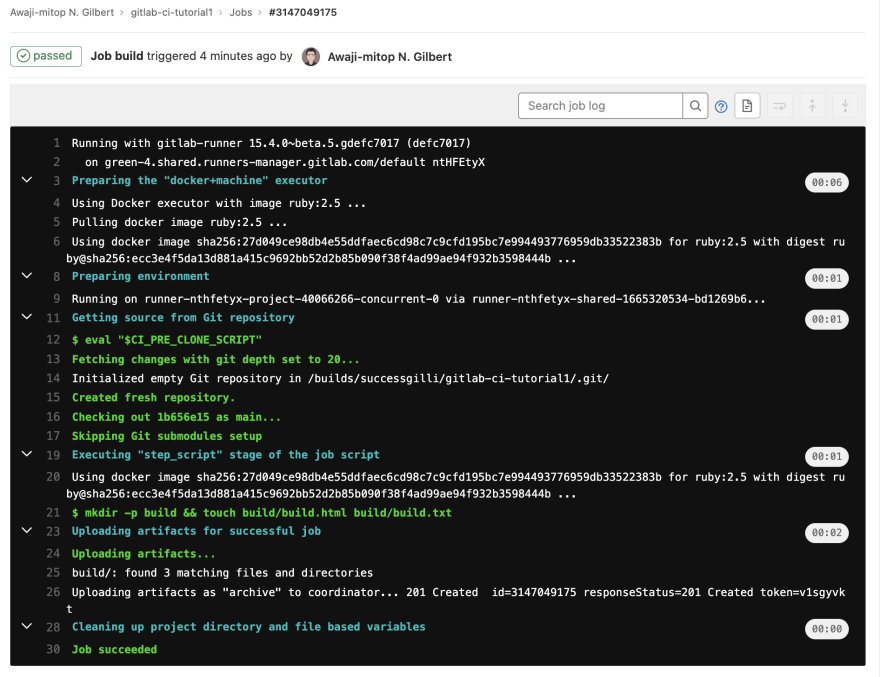

Notice in the GitLab project's pipeline view that only the build job ran.

This is because of the rule if: $CI_COMMIT_TAG in the rules section of the deploy job, which ensures the deployment runs only when we push a new tag or create one in the GitLab UI. For more detail, see Trigger pipeline with git tags.
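As written, any tag triggers a deployment. If only release-style tags should deploy, the rule can match the tag name against a regular expression. A sketch, assuming tags follow a V<number> convention like the V1 tag used in this guide; it replaces the rules section of the existing deploy job:

```yaml
deploy:
  # ...other keys of the deploy job unchanged...
  rules:
    # Deploy only for tags such as V1, V2 or V10 (assumed naming convention);
    # for branch pushes CI_COMMIT_TAG is unset, so the rule does not match
    - if: $CI_COMMIT_TAG =~ /^V[0-9]+$/
```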

Test the overall setup

Step 1:

Tag your commit.

```shell
git tag 'V1'
```

Step 2:

Push your tag.

```shell
git push origin 'V1'
```

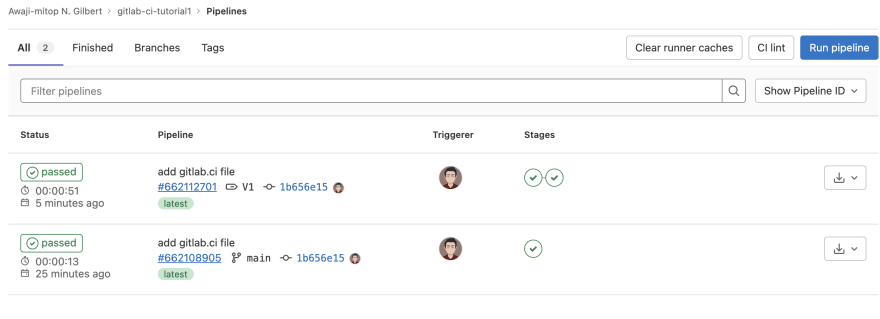

Notice that there is now a new pipeline at the top that ran two jobs: build and deploy.

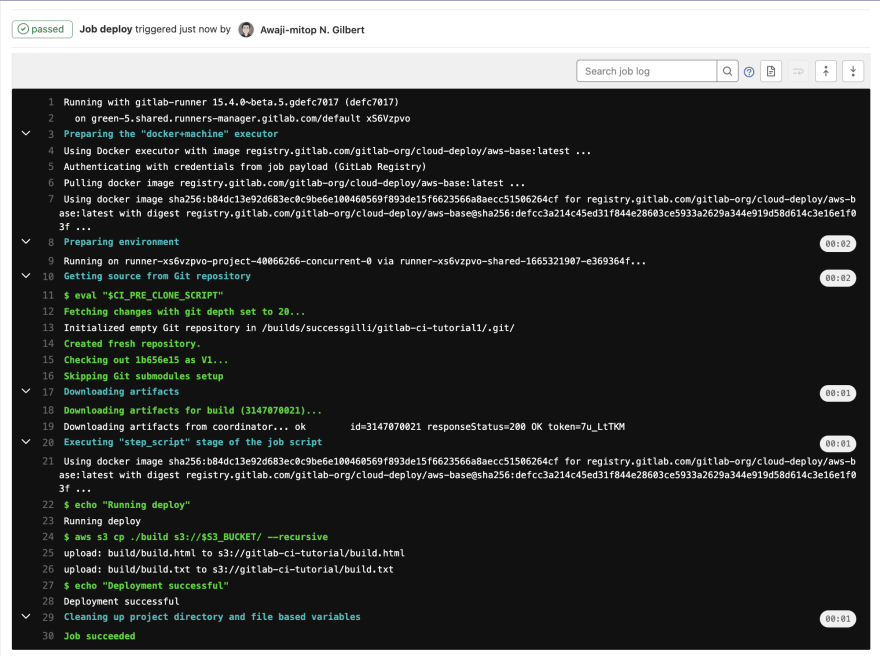

The image shows the completed deploy job.

Step 3:

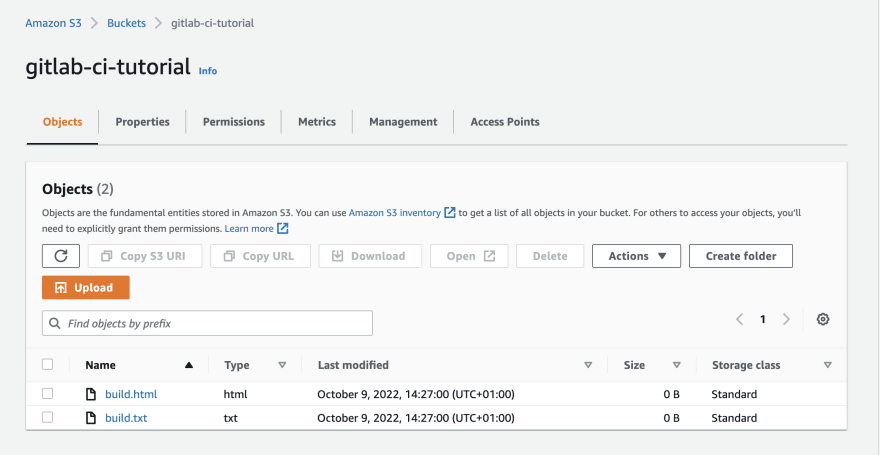

Check the bucket on AWS S3.

Refresh the page: the S3 bucket is no longer empty and contains the files built and deployed by our pipeline.

Congratulations, we did it! 🎉

Conclusion

There are different ways to deploy code with GitLab CI/CD pipelines. Using git tags is a common approach, used for npm packages and at most organisations I have worked with, and among its many advantages is easy rollback: every tag marks a known release that can be deployed again.
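To make that rollback easier to act on, the deploy job can declare a GitLab environment. GitLab then records each deployment to that environment and lets you re-deploy an earlier one from the project's Environments page. A sketch, where the environment name production is an assumption:

```yaml
deploy:
  # ...existing deploy job keys unchanged...
  environment:
    name: production # assumed environment name
```

Re-deploying an older tag re-runs that pipeline's deploy job, which needs the matching build artifacts, so check that expire_in and your project's artifact retention settings keep them available long enough.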